Social robot

An anthropomorphic social robot: a modern human-computer interface. The robot is a physical avatar for a chatbot program and a voice imitator. Combining the robot's components with the chatbot and the voice imitator lets the avatar hear what we ask it and answer in Polish, reproducing the tone of voice and manner of speaking of a chosen person. A camera mounted in the robot allows it to recognize objects in its surroundings. The robot is a contribution to the next generation of social robots that I am working on. The robot bust can be used in places and situations that call for contact with people, such as museums, galleries and concerts. Once mindfiles are uploaded, the avatar can represent our behavior in the future, so that, for example, our grandchildren can see what we were like.

Nowadays robots accompany us in our lives more and more often. The idea of creating something in the image and likeness of man is not new: ancient Greek myths already told, for example, of Talos (Τάλως), a huge bronze giant guarding Crete. The aim of my work was to create a robot, a physical avatar that improves communication between computers and humans.

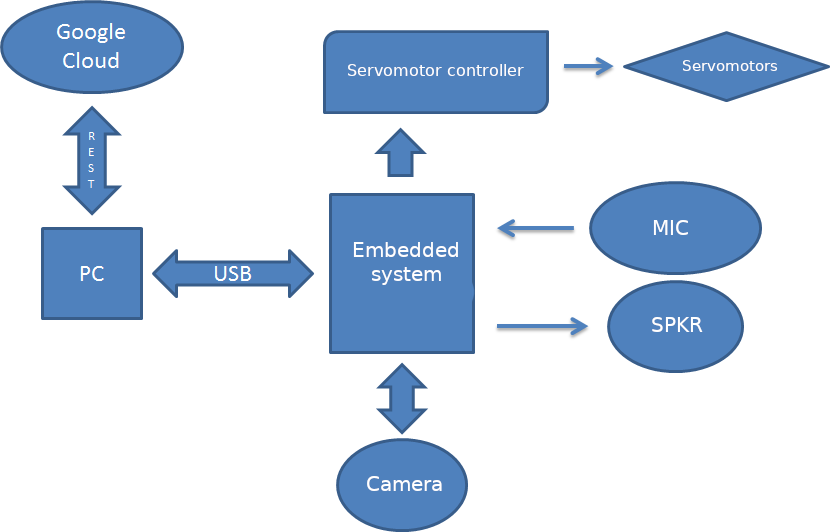

The mechanical construction combines metal and plastic elements, including 3D-printed parts. The skull is covered with a cast silicone head, which is set in motion by epoxy elements mechanically connected (using lines, among other things) to servomechanisms. These are controlled by an embedded system based on AVR microcontrollers. The embedded system is connected to a computer running an application that processes the robot's signals, generates the avatar's movement sequences and communicates with the cloud.
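
The movement sequences mentioned above can be pictured as keyframes (time, target angle) per servo, which the PC application interpolates before streaming positions to the servo controller. The sketch below is a minimal illustration under that assumption: the class and method names are hypothetical, and the original application's sequence format is not described in the text.

```java
import java.util.Arrays;

/** Hypothetical keyframe track for one servo: time in ms mapped to angle in degrees. */
final class ServoTrack {
    private final long[] timesMs;      // keyframe times, strictly increasing
    private final double[] anglesDeg;  // target angle at each keyframe

    ServoTrack(long[] timesMs, double[] anglesDeg) {
        if (timesMs.length != anglesDeg.length || timesMs.length == 0) {
            throw new IllegalArgumentException("need matching, non-empty keyframe arrays");
        }
        for (int i = 1; i < timesMs.length; i++) {
            if (timesMs[i] <= timesMs[i - 1]) {
                throw new IllegalArgumentException("keyframe times must strictly increase");
            }
        }
        this.timesMs = Arrays.copyOf(timesMs, timesMs.length);
        this.anglesDeg = Arrays.copyOf(anglesDeg, anglesDeg.length);
    }

    /** Linearly interpolated angle at time t; clamps before the first and after the last keyframe. */
    double angleAt(long t) {
        if (t <= timesMs[0]) return anglesDeg[0];
        int last = timesMs.length - 1;
        if (t >= timesMs[last]) return anglesDeg[last];
        int i = 1;
        while (timesMs[i] < t) i++;   // first keyframe at or after t
        double frac = (double) (t - timesMs[i - 1]) / (timesMs[i] - timesMs[i - 1]);
        return anglesDeg[i - 1] + frac * (anglesDeg[i] - anglesDeg[i - 1]);
    }

    /** Samples the whole track every stepMs (e.g. 20 ms to match a 50 Hz servo refresh). */
    double[] sample(long stepMs) {
        long end = timesMs[timesMs.length - 1];
        int n = (int) ((end - timesMs[0]) / stepMs) + 1;
        double[] out = new double[n];
        for (int k = 0; k < n; k++) {
            out[k] = angleAt(timesMs[0] + k * stepMs);
        }
        return out;
    }
}
```

For example, a jaw track with keyframes at 0, 100 and 300 ms (90°, 120°, 60°) yields 105° at 50 ms. Linear interpolation is the simplest choice; easing curves would give softer, more natural facial motion.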

The basis of the mechanical construction is a metal shaft that attaches the head to a plastic base. The shaft is set in a ball joint that lets the neck rotate about three axes. The neck position (the position of the joint) is controlled by two commutator motors working with toothed helical gears, which transmit the drive, increase the torque and move a toothed rack.

The robot's skull is equipped with servos for:

- the jaw,

- up/down eye movement,

- left/right eyeball movement,

- the eyelids (two servos),

- the eyebrows.

Further servos move:

- the upper part of the mouth,

- the left part of the mouth,

- the right part of the mouth,

- the left eyebrow,

- the right eyebrow.

The servo controller is based on 8-bit AVR microcontrollers, clocked by an external 8 MHz quartz crystal. The following servos were used in the project:

- jaw (x1): TowerPro MG-946R analog servo, torque: 13 kg·cm (1.27 Nm), speed: 0.17 s/60°,

- eyes up/down (x1): TowerPro MG-90D digital micro servo, torque: 2.4 kg·cm (0.23 Nm), speed: 0.08 s/60°,

- eyeballs left/right (x1): TowerPro MG-90D digital micro servo, torque: 2.4 kg·cm (0.23 Nm), speed: 0.08 s/60°,

- eyelids (x2): TowerPro MG-90D digital micro servo, torque: 2.4 kg·cm (0.23 Nm), speed: 0.08 s/60°,

- eyebrows (x2): Feetech FR5311M analog servo, torque: 14.5 kg·cm (1.42 Nm), speed: 0.13 s/60°,

- upper mouth (x1): PowerHD HD-8307TG digital servo (giant), torque: 8.5 kg·cm (0.83 Nm), speed: 0.08 s/60°,

- left and right parts of the lips (x4): TowerPro MG-946R analog servo, torque: 13 kg·cm (1.27 Nm), speed: 0.17 s/60°,

- left and right eyebrows (x2): TowerPro MG-946R analog servo, torque: 13 kg·cm (1.27 Nm), speed: 0.17 s/60°,

- arms (x2): Feetech Wing FT3325M digital micro servo, torque: 7.21 kg·cm (0.71 Nm), speed: 0.13 s/60°,

as well as the commutator motors that control the neck.
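
With the 8 MHz clock mentioned above, the timer settings for a conventional hobby-servo signal can be worked out as in the sketch below. It rests on assumptions the text does not state: a 50 Hz (20 ms) frame, pulses of 1.0 to 2.0 ms for 0° to 180° (many of the listed servos accept a wider range), and a 16-bit AVR timer in fast PWM mode with ICR1 as TOP and a prescaler of 8, so that one tick lasts 1 µs. With this many servos per microcontroller, the actual firmware may generate the pulses in software instead; the arithmetic is the same. Java is used only to keep one language on this page; the firmware itself would be written in C.

```java
/** Worked timer arithmetic for servo PWM on an 8 MHz AVR (assumed settings, see lead-in). */
final class ServoPwm {
    static final long F_CPU_HZ = 8_000_000L;  // 8 MHz crystal, as stated in the text
    static final int PRESCALER = 8;           // assumption: 1 timer tick = 1 us
    static final int FRAME_HZ = 50;           // assumption: 20 ms servo frame
    static final double MIN_PULSE_US = 1000;  // assumption: pulse width for 0 degrees
    static final double MAX_PULSE_US = 2000;  // assumption: pulse width for 180 degrees

    /** Timer ticks per microsecond for the chosen prescaler. */
    static double ticksPerMicro() {
        return F_CPU_HZ / (double) PRESCALER / 1_000_000.0;
    }

    /** ICR1 (TOP) value in fast PWM mode, where f = F_CPU / (N * (1 + TOP)). */
    static long topValue() {
        return F_CPU_HZ / ((long) PRESCALER * FRAME_HZ) - 1;
    }

    /** Pulse width in microseconds for an angle, clamped to [0, 180] degrees. */
    static double pulseMicros(double angleDeg) {
        double a = Math.max(0, Math.min(180, angleDeg));
        return MIN_PULSE_US + (MAX_PULSE_US - MIN_PULSE_US) * a / 180.0;
    }

    /** OCR1x value: in non-inverting fast PWM the pin stays high for OCR + 1 ticks. */
    static long compareValue(double angleDeg) {
        return Math.round(pulseMicros(angleDeg) * ticksPerMicro()) - 1;
    }
}
```

Under these assumptions TOP is 19999 and the neutral position (90°, 1.5 ms) needs a compare value of 1499.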

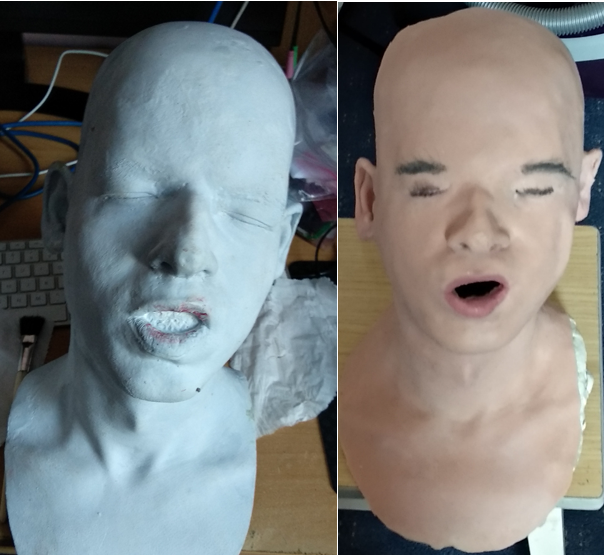

The cast head and silicone mask are shown in the following figure. The mask is a negative of a head model made of moulding sand. The skull and the base on which the mask is placed combine the author's own components, either 3D-printed or cast in epoxy resin, with factory parts from dolls and toys owned by the author.

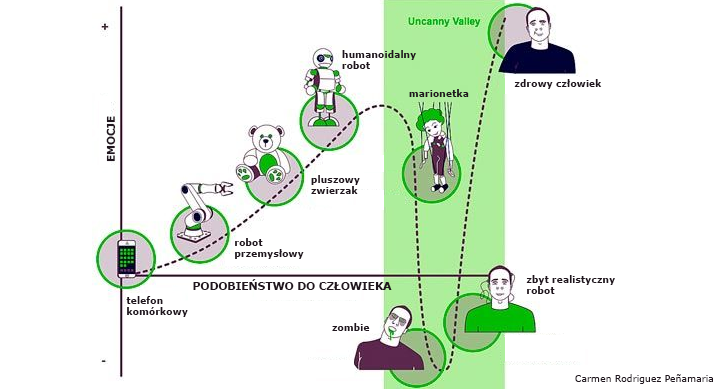

Research suggests that an observer's psychological comfort when facing a robot depends on how closely the robot resembles a human being, as shown in the figure below. This hypothesis, known as the uncanny valley, holds that the more human-like a robot is, the more pleasant it seems, but only up to a certain degree of similarity, beyond which the observer's comfort drops sharply. Past a further point on the curve, comfort rises again, as the robot becomes almost indistinguishable from a human. I tried to bear this in mind when designing the system.

An addressable RGB LED bar (WS2813), connected to the servo control module, informs the user about the robot's emotional state.
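
WS2813 LEDs take 24 bits per LED, sent in green, red, blue order with the most significant bit first. The sketch below builds that byte stream for a bar of LEDs from an emotional state. The set of emotions and their colours are hypothetical (the text does not list them), and so is the split of work: here the bytes are assumed to be prepared on the PC side, while the timing-critical waveform would be generated by the microcontroller on the servo module.

```java
import java.util.EnumMap;
import java.util.Map;

final class EmotionLeds {
    /** Hypothetical emotional states; the original set is not given in the text. */
    enum Emotion { NEUTRAL, HAPPY, SAD, ANGRY }

    // Hypothetical colour per emotion, stored as 0xRRGGBB.
    private static final Map<Emotion, Integer> COLOURS = new EnumMap<>(Emotion.class);
    static {
        COLOURS.put(Emotion.NEUTRAL, 0xFFFFFF);
        COLOURS.put(Emotion.HAPPY, 0xFFC800);
        COLOURS.put(Emotion.SAD, 0x0000FF);
        COLOURS.put(Emotion.ANGRY, 0xFF0000);
    }

    /** Bytes for `count` LEDs, 3 bytes each in the G, R, B order the WS2813 expects. */
    static byte[] frame(Emotion e, int count, double brightness) {
        int rgb = COLOURS.get(e);
        double b = Math.max(0, Math.min(1, brightness));  // clamp brightness to [0, 1]
        int r = (int) Math.round(((rgb >> 16) & 0xFF) * b);
        int g = (int) Math.round(((rgb >> 8) & 0xFF) * b);
        int bl = (int) Math.round((rgb & 0xFF) * b);
        byte[] out = new byte[count * 3];
        for (int i = 0; i < count; i++) {
            out[3 * i] = (byte) g;       // green first
            out[3 * i + 1] = (byte) r;   // then red
            out[3 * i + 2] = (byte) bl;  // then blue
        }
        return out;
    }
}
```

Scaling brightness on the host keeps the firmware simple: the microcontroller only has to clock the bytes out unchanged.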

The PC application was written in Java in the NetBeans IDE, with Apache Maven used for build automation. The application is responsible for:

- sending servo control sequences to the robot's modules,

- processing camera data and recognizing objects in the environment,

- communicating with Google Cloud for speech recognition (STT) and speech generation (my own TTS, described in the Projects tab),

- answering questions put to the robot, using the implemented chatbot (AIML plus a SEQ2SEQ model based on recurrent neural networks).

The application communicates with the cloud (a Google Cloud virtual machine instance with a 100 GB disk, 60 GB of RAM and two NVIDIA Tesla K80 GPUs) through a REST API.
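
A minimal sketch of such a REST call, using the java.net.http client (this assumes Java 11 or later; the Java version of the original application is not stated). The /chat route, the base URL and the JSON body {"text": ...} are hypothetical, since the API exposed by the cloud instance is not described.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

final class CloudClient {
    private final HttpClient http = HttpClient.newBuilder()
            .connectTimeout(Duration.ofSeconds(5))
            .build();
    private final URI endpoint;

    CloudClient(String baseUrl) {
        this.endpoint = URI.create(baseUrl + "/chat");  // hypothetical route
    }

    /** Builds a POST request carrying the user's question as JSON (hypothetical schema). */
    HttpRequest buildChatRequest(String question) {
        String json = "{\"text\":\"" + escape(question) + "\"}";
        return HttpRequest.newBuilder(endpoint)
                .timeout(Duration.ofSeconds(10))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(json))
                .build();
    }

    /** Sends the request and returns the raw response body; non-200 answers raise an error. */
    String ask(String question) throws IOException, InterruptedException {
        HttpResponse<String> resp =
                http.send(buildChatRequest(question), HttpResponse.BodyHandlers.ofString());
        if (resp.statusCode() != 200) {
            throw new IOException("server returned " + resp.statusCode());
        }
        return resp.body();
    }

    /** Minimal JSON string escaping: handles backslashes and quotes only. */
    static String escape(String s) {
        return s.replace("\\", "\\\\").replace("\"", "\\\"");
    }
}
```

A production client would use a proper JSON library rather than the minimal escaping shown here.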

Face detection and recognition are based on the OpenCV library, a set of programming functions for image processing. It is open source and available on multiple platforms. The library was originally written in C and later largely in C++, and it has Java bindings, which this system uses. Version 2.4.11 was used in this work. The system uses a Haar cascade classifier for face detection and the LBPH (Local Binary Patterns Histograms) algorithm, which compares histograms of local binary patterns, for face recognition.
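
To make the LBPH step concrete, the sketch below computes the basic 8-neighbour LBP code of a pixel, a histogram of codes over a region, and a chi-square distance between histograms. It illustrates the technique in plain Java and is not OpenCV's implementation; the neighbour ordering is one common convention, and full LBPH divides the face into a grid of cells and concatenates the per-cell histograms before comparing them.

```java
final class Lbp {
    // Neighbour offsets (dy, dx), clockwise starting from the top-left pixel.
    private static final int[][] OFFSETS = {
        {-1, -1}, {-1, 0}, {-1, 1}, {0, 1}, {1, 1}, {1, 0}, {1, -1}, {0, -1}
    };

    /** 8-bit LBP code of interior pixel (y, x): bit set when the neighbour is >= the centre. */
    static int code(int[][] img, int y, int x) {
        int c = img[y][x];
        int code = 0;
        for (int k = 0; k < 8; k++) {
            if (img[y + OFFSETS[k][0]][x + OFFSETS[k][1]] >= c) {
                code |= 1 << (7 - k);  // first neighbour becomes the most significant bit
            }
        }
        return code;
    }

    /** 256-bin histogram of LBP codes over the interior pixels (the border is skipped). */
    static int[] histogram(int[][] img) {
        int[] h = new int[256];
        for (int y = 1; y < img.length - 1; y++) {
            for (int x = 1; x < img[0].length - 1; x++) {
                h[code(img, y, x)]++;
            }
        }
        return h;
    }

    /** Chi-square distance between two histograms; smaller means more similar. */
    static double chiSquare(int[] a, int[] b) {
        double d = 0;
        for (int i = 0; i < a.length; i++) {
            int s = a[i] + b[i];
            if (s > 0) {
                double diff = a[i] - b[i];
                d += diff * diff / s;
            }
        }
        return d;
    }
}
```

Recognition then reduces to a nearest-neighbour search: the face whose stored histogram has the smallest distance to the new one is reported, usually subject to a threshold.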

One of the main functions of the system is recognizing types of objects other than faces in the environment. This allows the robot to orient itself and comment on what it sees. This is done with deep neural networks built with the MXNet library, which supports designing, training and deploying deep neural networks on a wide range of platforms, from cloud systems to mobile devices, and offers a high degree of scalability and fast training of neural models. A deep neural network built with this library was trained on a subset of the ImageNet database containing 1000 classes. Training was carried out on a computing cluster equipped with GTX 980 cards. The trained model achieves a classification accuracy of 78% on the validation set, which was created by randomly holding out about 20% of the training set.
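
The evaluation described above (a random hold-out of about 20% of the data and a single accuracy figure) can be sketched as follows. The sketch assumes the reported 78% is top-1 accuracy, which the text does not state, and is written in Java only for consistency with the rest of this page; training itself was done with MXNet.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.Random;

final class HoldOut {
    /** Randomly splits indices 0..n-1 into [train, validation]; the seed makes it reproducible. */
    static List<List<Integer>> split(int n, double validationFraction, long seed) {
        List<Integer> idx = new ArrayList<>(n);
        for (int i = 0; i < n; i++) idx.add(i);
        Collections.shuffle(idx, new Random(seed));
        int nVal = (int) Math.round(n * validationFraction);
        List<Integer> val = new ArrayList<>(idx.subList(0, nVal));
        List<Integer> train = new ArrayList<>(idx.subList(nVal, n));
        return Arrays.asList(train, val);
    }

    /** Top-1 accuracy: share of samples whose highest-scoring class equals the true label. */
    static double top1Accuracy(double[][] scores, int[] labels) {
        int correct = 0;
        for (int i = 0; i < labels.length; i++) {
            int best = 0;
            for (int c = 1; c < scores[i].length; c++) {
                if (scores[i][c] > scores[i][best]) best = c;
            }
            if (best == labels[i]) correct++;
        }
        return (double) correct / labels.length;
    }
}
```

Because the validation images are drawn from the same pool as the training data, such a figure measures in-distribution accuracy and may be optimistic for real camera frames.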

Two chatbots work in the system. The first one uses a knowledge base of keywords assigned to predefined answers; the knowledge base was written in the AIML markup language. The second chatbot is based on recurrent neural networks: it is a SEQ2SEQ model consisting of two components, an encoder and a decoder built from RNNs, described in the article about the chatbot in the Projects tab.
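
The keyword matching performed by the first chatbot can be illustrated with a simplified AIML-style matcher: the input is upper-cased and stripped of punctuation, and a pattern word "*" matches one or more words. Real AIML interpreters also apply a fixed priority between wildcards and exact words and support much richer templates; here categories are simply tried in insertion order. Falling back to the SEQ2SEQ model when no category matches is an assumption about how the two chatbots cooperate, and the class name and example categories are hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Locale;
import java.util.Map;
import java.util.function.Function;

final class KeywordBot {
    private final Map<String, String> categories = new LinkedHashMap<>();  // pattern -> answer
    private final Function<String, String> fallback;                       // e.g. a SEQ2SEQ model

    KeywordBot(Function<String, String> fallback) { this.fallback = fallback; }

    void addCategory(String pattern, String answer) { categories.put(pattern, answer); }

    /** Upper-cases the input, replaces punctuation with spaces and collapses whitespace. */
    static String normalise(String s) {
        return s.toUpperCase(Locale.ROOT)
                .replaceAll("[^\\p{L}\\p{N} ]", " ")
                .trim()
                .replaceAll("\\s+", " ");
    }

    /** True if pattern words from index p match input words from index w; "*" eats 1+ words. */
    static boolean matches(String[] pat, int p, String[] words, int w) {
        if (p == pat.length) return w == words.length;
        if (pat[p].equals("*")) {
            for (int end = w + 1; end <= words.length; end++) {
                if (matches(pat, p + 1, words, end)) return true;
            }
            return false;
        }
        return w < words.length && pat[p].equals(words[w]) && matches(pat, p + 1, words, w + 1);
    }

    /** Answer from the first matching category, otherwise from the fallback model. */
    String reply(String input) {
        String norm = normalise(input);
        String[] words = norm.isEmpty() ? new String[0] : norm.split(" ");
        for (Map.Entry<String, String> c : categories.entrySet()) {
            if (matches(c.getKey().split(" "), 0, words, 0)) return c.getValue();
        }
        return fallback.apply(norm);
    }
}
```

Keeping the hand-written knowledge base in front of the neural model gives predictable answers to known questions while the SEQ2SEQ model covers open-ended input.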

The speech synthesis system does not use a standard speech synthesizer such as Ivona. The author wanted the synthesized voice to be as natural as possible and to sound like a chosen person. In the example, the goal was to reproduce the author's own voice. The system is based on a novel speech synthesis technique using deep convolutional neural networks (CNN) that process sequential data such as speech. To train the network, a training set of 20 hours of voice recordings with the corresponding transcriptions of the words read aloud was prepared.
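
The "DCN" entry in the technology list suggests dilated convolutions, as used in WaveNet-style speech synthesis; that architecture is an assumption here, since the text does not give the network's layout. The sketch shows a causal dilated 1-D convolution, whose output at time t depends only on the current and past samples, and the receptive field of a stack of such layers. With kernel size 2 and dilations 1, 2, 4, ..., 512, the stack sees 1 + (1 + 2 + ... + 512) = 1024 samples, which at a 16 kHz sample rate (also an assumption) is 64 ms of audio.

```java
final class DilatedConv {
    /**
     * Causal dilated 1-D convolution: y[t] = sum_k w[k] * x[t - k * dilation].
     * Samples before t = 0 are treated as zero, so y[t] never depends on future input.
     */
    static double[] causalConv(double[] x, double[] w, int dilation) {
        double[] y = new double[x.length];
        for (int t = 0; t < x.length; t++) {
            double acc = 0;
            for (int k = 0; k < w.length; k++) {
                int src = t - k * dilation;
                if (src >= 0) acc += w[k] * x[src];
            }
            y[t] = acc;
        }
        return y;
    }

    /** Receptive field, in samples, of stacked layers with the given kernel size and dilations. */
    static int receptiveField(int kernelSize, int[] dilations) {
        int rf = 1;
        for (int d : dilations) rf += (kernelSize - 1) * d;
        return rf;
    }
}
```

Doubling the dilation at each layer makes the receptive field grow exponentially with depth while the number of weights grows only linearly, which is why this design suits long audio sequences.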

The diagram below shows a block diagram of the system.

Technologies used: DCN, LSTM, AIML, Haar, MXNet, OpenCV, TensorFlow, Google Cloud instance, Maven, Java, C, Python...